Also of course if probabilistic error is not on critical path you do not have to worry.

Once we had a web service here customer was asking if they send us some users.

Think they are sending 10000-20000 users on every batch,and doing so frequent.

If I hit database and check if user exists, i will query for thousand times.

If I make a cache on memory, i need to make a hashmap with item size in database.

Say I have 50K user. Cache will be 50K x user name lets say.

In Bloom Filter instead of caching every time,we compute one projected value for a hash function

and put it in a bit array we occupy much much less space and get incredible performance.

And if we use a few hash functions at one time,we get more accurate results.

This algorithm always tell exact if item exists in cache.

If it says,not in cache, there is a probability it is saying wrong.

For my scenario it is not causing any problem but maybe one unnecessary database hit.

For example I am showing my cache for Country names.

It has 227 items.

Check below address for best parameters.

http://hur.st/bloomfilter?n=227&p=0.01

For our sample parameters are below.

n = 227, p = 0.01 (1 in 100) → m = 2,176 (272B), k = 7

With only 272 Byte and 7 hash functions we can get %1 error rate.

import java.util.BitSet;

import java.util.Random;

import com.sangupta.murmur.Murmur1;

import com.sangupta.murmur.Murmur2;

import com.sangupta.murmur.Murmur3;

public class BloomFilterCache {

public static String[] COUNTRIES = new String[] { "Afghanistan ", "Albania ", "Algeria ", "American Samoa ",

"Andorra ", "Angola ", "Anguilla ", "Antigua & Barbuda ", "Argentina ", "Armenia ", "Aruba ", "Australia ",

"Austria ", "Azerbaijan ", "Bahamas, The ", "Bahrain ", "Bangladesh ", "Barbados ", "Belarus ", "Belgium ",

"Belize ", "Benin ", "Bermuda ", "Bhutan ", "Bolivia ", "Bosnia & Herzegovina ", "Botswana ", "Brazil ",

"British Virgin Is. ", "Brunei ", "Bulgaria ", "Burkina Faso ", "Burma ", "Burundi ", "Cambodia ",

"Cameroon ", "Canada ", "Cape Verde ", "Cayman Islands ", "Central African Rep. ", "Chad ", "Chile ",

"China ", "Colombia ", "Comoros ", "Congo, Dem. Rep. ", "Congo, Repub. of the ", "Cook Islands ",

"Costa Rica ", "Cote d'Ivoire ", "Croatia ", "Cuba ", "Cyprus ", "Czech Republic ", "Denmark ", "Djibouti ",

"Dominica ", "Dominican Republic ", "East Timor ", "Ecuador ", "Egypt ", "El Salvador ",

"Equatorial Guinea ", "Eritrea ", "Estonia ", "Ethiopia ", "Faroe Islands ", "Fiji ", "Finland ", "France ",

"French Guiana ", "French Polynesia ", "Gabon ", "Gambia, The ", "Gaza Strip ", "Georgia ", "Germany ",

"Ghana ", "Gibraltar ", "Greece ", "Greenland ", "Grenada ", "Guadeloupe ", "Guam ", "Guatemala ",

"Guernsey ", "Guinea ", "Guinea-Bissau ", "Guyana ", "Haiti ", "Honduras ", "Hong Kong ", "Hungary ",

"Iceland ", "India ", "Indonesia ", "Iran ", "Iraq ", "Ireland ", "Isle of Man ", "Israel ", "Italy ",

"Jamaica ", "Japan ", "Jersey ", "Jordan ", "Kazakhstan ", "Kenya ", "Kiribati ", "Korea, North ",

"Korea, South ", "Kuwait ", "Kyrgyzstan ", "Laos ", "Latvia ", "Lebanon ", "Lesotho ", "Liberia ", "Libya ",

"Liechtenstein ", "Lithuania ", "Luxembourg ", "Macau ", "Macedonia ", "Madagascar ", "Malawi ",

"Malaysia ", "Maldives ", "Mali ", "Malta ", "Marshall Islands ", "Martinique ", "Mauritania ",

"Mauritius ", "Mayotte ", "Mexico ", "Micronesia, Fed. St. ", "Moldova ", "Monaco ", "Mongolia ",

"Montserrat ", "Morocco ", "Mozambique ", "Namibia ", "Nauru ", "Nepal ", "Netherlands ",

"Netherlands Antilles ", "New Caledonia ", "New Zealand ", "Nicaragua ", "Niger ", "Nigeria ",

"N. Mariana Islands ", "Norway ", "Oman ", "Pakistan ", "Palau ", "Panama ", "Papua New Guinea ",

"Paraguay ", "Peru ", "Philippines ", "Poland ", "Portugal ", "Puerto Rico ", "Qatar ", "Reunion ",

"Romania ", "Russia ", "Rwanda ", "Saint Helena ", "Saint Kitts & Nevis ", "Saint Lucia ",

"St Pierre & Miquelon ", "Saint Vincent and the Grenadines ", "Samoa ", "San Marino ",

"Sao Tome & Principe ", "Saudi Arabia ", "Senegal ", "Serbia ", "Seychelles ", "Sierra Leone ",

"Singapore ", "Slovakia ", "Slovenia ", "Solomon Islands ", "Somalia ", "South Africa ", "Spain ",

"Sri Lanka ", "Sudan ", "Suriname ", "Swaziland ", "Sweden ", "Switzerland ", "Syria ", "Taiwan ",

"Tajikistan ", "Tanzania ", "Thailand ", "Togo ", "Tonga ", "Trinidad & Tobago ", "Tunisia ", "Turkey ",

"Turkmenistan ", "Turks & Caicos Is ", "Tuvalu ", "Uganda ", "Ukraine ", "United Arab Emirates ",

"United Kingdom ", "United States ", "Uruguay ", "Uzbekistan ", "Vanuatu ", "Venezuela ", "Vietnam ",

"Virgin Islands ", "Wallis and Futuna ", "West Bank ", "Western Sahara ", "Yemen ", "Zambia ",

"Zimbabwe " };

private BitSet bitset;

int[] hashSeeds;

int noHashFunctions;

public boolean useSeed = true;

public int murmurVersion = 1;

public int getSeed(int i) {

if (useSeed)

return hashSeeds[i];

else

return i;

}

public BloomFilterCache(int slots, int hashFunctions) {

bitset = new BitSet(slots);

noHashFunctions = hashFunctions;

Random r = new Random(System.currentTimeMillis());

hashSeeds = new int[hashFunctions];

for (int i = 0; i < hashFunctions; ++i) {

hashSeeds[i] = r.nextInt();

}

}

public int getHash(String value, int i) {

if (murmurVersion == 1)

return (int) Murmur1.hash(value.getBytes(), 4, getSeed(i));

else if (murmurVersion == 2)

return (int) Murmur2.hash(value.getBytes(), 4, getSeed(i));

else

return (int) Murmur3.hash_x86_32(value.getBytes(), 4, getSeed(i));

}

public void add(String value) {

for (int i = 0; i < noHashFunctions; ++i) {

int h = getHash(value, i);

bitset.set(Math.abs(h) % bitset.size(), true);

}

}

public boolean contains(String value) {

for (int i = 0; i < noHashFunctions; ++i) {

int h = getHash(value, i);

if (!bitset.get(Math.abs(h) % bitset.size())) {

return false;

}

}

return true;

}

public static String generateRandomChars(String candidateChars, int length) {

StringBuilder sb = new StringBuilder();

Random random = new Random();

for (int i = 0; i < length; i++) {

sb.append(candidateChars.charAt(random.nextInt(candidateChars.length())));

}

return sb.toString();

}

public int getErrorCount(String[] testSet, String postFix, String prefix) {

int error = 0;

for (String s : testSet) {

if (contains(generateRandomChars("ABCDEFGHIJKLMNOPQRSTUVWXYZ".toLowerCase(), 3) + s + "")) {

error++;

}

}

return error;

}

public int getErrorCountRandom(int testSize) {

int error = 0;

for (int i = 0; i < testSize; i++) {

String test = generateRandomChars("ABCDEFGHIJKLMNOPQRSTUVWXYZ".toLowerCase(), 10);

if (contains(test) ) {

error++;

//System.err.println(test);

}

}

return error;

}

public static void testFor(int slots, int hashFunctions, boolean useSeed, int murmurVersion) {

BloomFilterCache bf = new BloomFilterCache(slots, hashFunctions);

bf.useSeed = useSeed;

bf.murmurVersion = murmurVersion;

for (String s : COUNTRIES) {

bf.add(s);

}

System.err.println("*****Params : slots = " + slots + " no#hash = " + hashFunctions + " cardinality = "

+ bf.bitset.cardinality() + " useSeed = " + useSeed + " murmurVersion = " + murmurVersion);

System.err.println("Query for Japan: " + bf.contains("Japan"));

System.err.println("Query for Dummy: " + bf.contains("Dummy"));

System.err.println("Error Count: " + bf.getErrorCount(COUNTRIES, "", ""));

System.err.println("Error Count Prefix: " + bf.getErrorCount(COUNTRIES, "abc", ""));

System.err.println("Error Count Postfix: " + bf.getErrorCount(COUNTRIES, "", "abc"));

System.err.println("Error Count Post-Pre: " + bf.getErrorCount(COUNTRIES, "abc", "def"));

System.err.println("Error Count Random: " + bf.getErrorCountRandom(COUNTRIES.length));

}

public static void main(String[] args) {

int[] versions = new int[] { 1, 2, 3 };

boolean[] useSeeds = new boolean[] { true, false };

for (int v : versions) {

System.err.println(" ---------------------- ");

for (boolean useSeed : useSeeds) {

testFor(10000, 7, useSeed, v);

testFor(2224, 2, useSeed, v);

testFor(2224, 7, useSeed, v);

testFor(2224, 17, useSeed, v);

testFor(582, 3, useSeed, v);

}

}

}

}

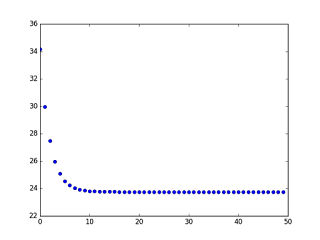

Below are multiple tests with multiple parameters .

You can check effects by changing parameters.

97 97 3104 96321 ---------------------- *****Params : slots = 10000 no#hash = 7 cardinality = 1336 useSeed = true murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 369 useSeed = true murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 10 Error Count Prefix: 6 Error Count Postfix: 4 Error Count Post-Pre: 4 Error Count Random: 2 *****Params : slots = 2224 no#hash = 7 cardinality = 1067 useSeed = true murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 3 Error Count Prefix: 3 Error Count Postfix: 1 Error Count Post-Pre: 2 Error Count Random: 1 *****Params : slots = 2224 no#hash = 17 cardinality = 1755 useSeed = true murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 3 Error Count Prefix: 4 Error Count Postfix: 5 Error Count Post-Pre: 3 Error Count Random: 2 *****Params : slots = 582 no#hash = 3 cardinality = 402 useSeed = true murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 61 Error Count Prefix: 57 Error Count Postfix: 53 Error Count Post-Pre: 58 Error Count Random: 54 *****Params : slots = 10000 no#hash = 7 cardinality = 1308 useSeed = false murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 371 useSeed = false murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 10 Error Count Prefix: 9 Error Count Postfix: 7 Error Count Post-Pre: 7 Error Count Random: 5 *****Params : slots = 2224 no#hash = 7 cardinality = 1034 useSeed = false murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 2 Error Count Postfix: 1 Error Count Post-Pre: 2 Error Count Random: 0 *****Params : slots = 2224 no#hash = 17 cardinality = 1721 useSeed = false murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 2 Error Count Prefix: 3 Error Count Postfix: 4 Error Count Post-Pre: 3 Error Count Random: 1 *****Params : slots = 582 no#hash = 3 cardinality = 378 useSeed = false murmurVersion = 1 Query for Japan: true Query for Dummy: false Error Count: 49 Error Count Prefix: 42 Error Count Postfix: 47 Error Count Post-Pre: 33 Error Count Random: 45 ---------------------- *****Params : slots = 10000 no#hash = 7 cardinality = 1339 useSeed = true murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 382 useSeed = true murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 9 Error Count Prefix: 6 Error Count Postfix: 6 Error Count Post-Pre: 3 Error Count Random: 4 *****Params : slots = 2224 no#hash = 7 cardinality = 1069 useSeed = true murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 1 Error Count Prefix: 3 Error Count Postfix: 1 Error Count Post-Pre: 1 Error Count Random: 1 *****Params : slots = 2224 no#hash = 17 cardinality = 1780 useSeed = true murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 3 Error Count Prefix: 3 Error Count Postfix: 4 Error Count Post-Pre: 3 Error Count Random: 8 *****Params : slots = 582 no#hash = 3 cardinality = 386 useSeed = true murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 50 Error Count Prefix: 43 Error Count Postfix: 48 Error Count Post-Pre: 40 Error Count Random: 53 *****Params : slots = 10000 no#hash = 7 cardinality = 1348 useSeed = false murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 383 useSeed = false murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 11 Error Count Prefix: 5 Error Count Postfix: 6 Error Count Post-Pre: 8 Error Count Random: 5 *****Params : slots = 2224 no#hash = 7 cardinality = 1063 useSeed = false murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 2 Error Count Postfix: 1 Error Count Post-Pre: 1 Error Count Random: 2 *****Params : slots = 2224 no#hash = 17 cardinality = 1749 useSeed = false murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 1 Error Count Prefix: 3 Error Count Postfix: 4 Error Count Post-Pre: 1 Error Count Random: 3 *****Params : slots = 582 no#hash = 3 cardinality = 408 useSeed = false murmurVersion = 2 Query for Japan: true Query for Dummy: false Error Count: 60 Error Count Prefix: 61 Error Count Postfix: 49 Error Count Post-Pre: 47 Error Count Random: 60 ---------------------- *****Params : slots = 10000 no#hash = 7 cardinality = 1322 useSeed = true murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 359 useSeed = true murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 18 Error Count Prefix: 22 Error Count Postfix: 12 Error Count Post-Pre: 13 Error Count Random: 14 *****Params : slots = 2224 no#hash = 7 cardinality = 1027 useSeed = true murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 3 Error Count Post-Pre: 8 Error Count Random: 6 *****Params : slots = 2224 no#hash = 17 cardinality = 1727 useSeed = true murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 8 Error Count Prefix: 6 Error Count Postfix: 8 Error Count Post-Pre: 3 Error Count Random: 6 *****Params : slots = 582 no#hash = 3 cardinality = 389 useSeed = true murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 52 Error Count Prefix: 63 Error Count Postfix: 49 Error Count Post-Pre: 49 Error Count Random: 56 *****Params : slots = 10000 no#hash = 7 cardinality = 1332 useSeed = false murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 0 Error Count Prefix: 0 Error Count Postfix: 0 Error Count Post-Pre: 0 Error Count Random: 0 *****Params : slots = 2224 no#hash = 2 cardinality = 383 useSeed = false murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 12 Error Count Prefix: 4 Error Count Postfix: 10 Error Count Post-Pre: 5 Error Count Random: 5 *****Params : slots = 2224 no#hash = 7 cardinality = 1060 useSeed = false murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 2 Error Count Prefix: 0 Error Count Postfix: 3 Error Count Post-Pre: 0 Error Count Random: 1 *****Params : slots = 2224 no#hash = 17 cardinality = 1782 useSeed = false murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 4 Error Count Prefix: 6 Error Count Postfix: 7 Error Count Post-Pre: 6 Error Count Random: 5 *****Params : slots = 582 no#hash = 3 cardinality = 392 useSeed = false murmurVersion = 3 Query for Japan: true Query for Dummy: false Error Count: 59 Error Count Prefix: 59 Error Count Postfix: 57 Error Count Post-Pre: 60 Error Count Random: 57